Docker vs Virtual Machines

Before moving ahead with Docker, we first need to understand why Docker is needed when Virtual Machines already exist.

What is a Virtual Machine?

A Virtual Machine, commonly shortened to VM, behaves like any physical computer such as a laptop, smartphone, or server. It has a CPU, memory, and disks to store your files, and it can connect to the internet if needed.

How does a virtual machine work?

Virtualization is the process of creating a software-based, or "virtual" version of a computer, with dedicated amounts of CPU, memory, and storage that are "borrowed" from a physical host computer, such as your personal computer and/or a remote server.

A virtual machine is a computer file, typically called an image, that behaves like an actual computer. It can run in a window as a separate computing environment, often to run a different operating system or even to function as the user's entire computer experience as is common on many people's work computers.

The virtual machine is partitioned from the rest of the system, meaning that the software inside a VM can't interfere with the host computer's primary operating system.

A hypervisor is the software that creates and runs virtual machines, allowing us to run multiple operating systems on one physical host.

Running multiple virtual machines on one physical machine can result in unstable performance if infrastructure requirements are not met.

Virtual machines are less efficient and run slower than a full physical computer.

Because each VM has its own OS and kernel, it consumes a lot of resources, much of which is wasted. The disk space allocated to a VM is also often not fully used by the microservices running inside it.

DOCKER

Docker is a lightweight, open-source containerization platform written in Go. It allows developers to package applications into containers that can be easily shared and deployed across different environments.

Containers are lightweight, loosely isolated environments that contain everything needed to run the application, so we do not need to rely on what is currently installed on the host.

We also do not need to pre-allocate RAM or disk space. A container takes RAM and disk space as it needs them, so resources are not wasted on fixed reservations.

You can also think of containers as running instances of images that are isolated and have their own environments and sets of processes.

An image is a package, or read-only template, that is used to create one or more containers.
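The relationship between one image and several containers, and the lack of up-front resource reservation, can be sketched with a few commands. This is only an illustration: the nginx image, the container names web1 and web2, and the limit values are assumptions chosen for the example, not something prescribed above.

```shell
#!/bin/sh
IMAGE="nginx"   # illustrative image from Docker Hub (an assumption for this sketch)

if command -v docker >/dev/null 2>&1; then
  # Two independent containers created from the same read-only image.
  docker run -d --name web1 "$IMAGE"
  # Nothing is reserved by default; --memory and --cpus add optional caps.
  docker run -d --name web2 --memory 256m --cpus 0.5 "$IMAGE"

  # Both rows list the same image but different container IDs.
  docker ps --filter "ancestor=$IMAGE" --format '{{.ID}}  {{.Image}}  {{.Names}}'
  # One-off snapshot of resource usage for both containers.
  docker stats --no-stream web1 web2

  # Clean up both containers.
  docker rm -f web1 web2
else
  echo "docker CLI not found; skipping"
fi
```

Limits are opt-in here, which is the opposite of a VM, where memory and disk are normally sized before the machine boots.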

Why Docker Over VMs?

Docker is often preferred over traditional Virtual Machines (VMs) for several reasons:

Resource Efficiency:

Docker containers share the host OS kernel, which reduces the overhead compared to VMs that require a full OS for each instance.

Containers can start up and scale much faster than VMs due to their lightweight nature.
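One way to observe the shared kernel is to compare the kernel release reported by the host with the one reported inside a container. The sketch below assumes the small alpine image from Docker Hub, which is an illustrative choice.

```shell
#!/bin/sh
# Kernel release reported by the host.
host_kernel=$(uname -r)
echo "host kernel:      $host_kernel"

if command -v docker >/dev/null 2>&1; then
  # Same query from inside a throwaway container (--rm deletes it afterwards).
  container_kernel=$(docker run --rm alpine uname -r)
  echo "container kernel: $container_kernel"
fi
```

On a Linux host the two values match, because the container has no kernel of its own. With Docker Desktop on macOS or Windows, the container instead reports the kernel of the Linux VM that Docker Desktop runs behind the scenes.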

Isolation with Less Overhead:

While VMs provide strong isolation by emulating entire operating systems, Docker containers offer a good balance between isolation and resource efficiency.

Containers isolate application processes, filesystems, and network, without the need to emulate a complete OS.

Portability:

Docker containers encapsulate applications and their dependencies, ensuring consistency across different environments. This portability simplifies the deployment process, making it easier to move applications between development, testing, and production environments.

Ease of Deployment and Orchestration:

Docker simplifies the deployment process with its containerization approach. Once an application and its dependencies are containerized, it can be deployed consistently across various environments.

Container orchestration tools like Kubernetes work seamlessly with Docker, providing powerful tools for managing and scaling containerized applications.

Resource Utilization:

Docker allows for better utilization of system resources since it doesn't require the duplication of an entire operating system for each application instance.

Multiple containers can run on a single host, optimizing resource consumption.

Development Efficiency:

Developers can define the application environment in a Dockerfile, ensuring that the application runs consistently across different development machines and environments.

Docker's layering system allows for incremental updates, making the development and deployment processes more efficient.
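As a rough illustration of a Dockerfile and its layers, the script below writes a four-line Dockerfile for a hypothetical one-file Python application and builds it. The file name app.py, the image tag hello-app, and the python:3.12-slim base image are all assumptions made for this sketch.

```shell
#!/bin/sh
# Work in a scratch directory so nothing in the current project is touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Hypothetical application: a one-line Python script.
echo 'print("hello from a container")' > app.py

# Instructions such as COPY add a layer on top of the base image;
# unchanged layers are reused from the build cache on the next build.
cat > Dockerfile <<'EOF'
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
EOF

if command -v docker >/dev/null 2>&1; then
  docker build -t hello-app .
  docker run --rm hello-app
fi
```

Editing app.py and rebuilding should only redo the COPY step and what follows it, which is where the incremental behavior comes from.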

Community and Ecosystem:

Docker has a large and active community, resulting in a rich ecosystem of pre-built images and tools that facilitate the containerization process.

Many third-party applications and services integrate seamlessly with Docker.

Why do we need Docker?

We need Docker because of incompatibility issues that arise when our software's dependencies are not satisfied on the client system, for example when the libraries, frameworks, or OS configuration we used do not match those on the client system.

Docker packages a piece of software together with all of its dependencies and configuration into a Docker container. This makes the software portable and gives it the same environment it had when we built it, so it runs on any computer with Docker installed, regardless of differing configurations or OS types. The result is fast and consistent delivery of applications.

Installing Docker

To install Docker, refer to Installation.

Docker Architecture

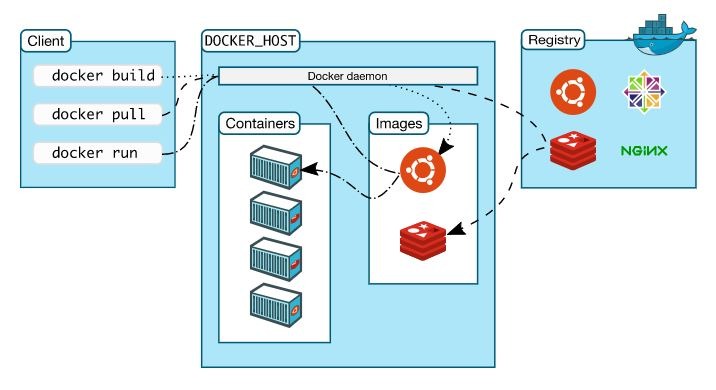

Docker uses a client-server architecture.

Docker Engine is the service that runs containers on any host that has Docker installed, whether the host OS is Linux, Windows, or macOS.

Docker Client: It is the primary way that many Docker users interact with Docker.

Example: When we use the "docker run" command, the client sends this command to the Docker Daemon, which carries it out. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.

Docker Registry: It is a central repository where Docker images are stored. Docker Hub is the public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default.
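The Docker Hub default can be made visible by expanding a short image name into its full form. The function below is a simplified sketch of that expansion, not Docker's actual implementation: the name expand_image_ref is hypothetical, and digest references (name@sha256:...) are not handled.

```shell
#!/bin/sh
# Simplified sketch of how a short image name is completed.
expand_image_ref() {
  ref=$1
  first=${ref%%/*}
  case $ref in
    */*)
      case $first in
        *.*|*:*|localhost) ;;            # first part already names a registry
        *) ref="docker.io/$ref" ;;       # user/repository on Docker Hub
      esac ;;
    *) ref="docker.io/library/$ref" ;;   # official image on Docker Hub
  esac
  # Add the default tag when the last path component has none.
  last=${ref##*/}
  case $last in
    *:*) ;;
    *) ref="$ref:latest" ;;
  esac
  echo "$ref"
}

expand_image_ref nginx                 # docker.io/library/nginx:latest
expand_image_ref redis:7               # docker.io/library/redis:7
expand_image_ref myuser/myapp          # docker.io/myuser/myapp:latest
expand_image_ref localhost:5000/myapp  # localhost:5000/myapp:latest
```

The names myuser/myapp and localhost:5000/myapp are made up for the example. The last one shows that an image name starting with a registry address is not looked up on Docker Hub.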

When we install Docker, we actually install 3 components:

Docker Daemon (dockerd): is the background process that manages Docker objects such as images, containers, volumes, etc.

Docker CLI: is the command line interface that we use to perform actions such as running a container, stopping a container, etc. It uses the REST API to interact with the Docker Daemon.

Docker REST API Server: is the API interface that programs can use to talk to the daemon and give it instructions.

The Docker Client and Docker Daemon can run on the same system, or we can connect a Docker Client to a remote Docker Daemon.
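To make the client/daemon split concrete, the daemon's REST API can be called directly with curl instead of going through the docker CLI. This sketch assumes a local Linux daemon listening on the usual Unix socket path, and that the current user is allowed to read that socket. The remote user and host in the final comment are hypothetical.

```shell
#!/bin/sh
# Default Unix socket of a local daemon on Linux (assumed path; yours may differ).
SOCK="/var/run/docker.sock"

if [ -S "$SOCK" ] && command -v curl >/dev/null 2>&1; then
  # Ask the daemon for its version over the REST API.
  curl --silent --unix-socket "$SOCK" http://localhost/version || echo "request failed"
  echo
  # List running containers; "docker ps" asks the daemon for the same data.
  curl --silent --unix-socket "$SOCK" http://localhost/containers/json || echo "request failed"
  echo
else
  echo "no local Docker socket or curl found; skipping"
fi

# Pointing the CLI at a remote daemon over SSH (hypothetical user and host):
#   DOCKER_HOST=ssh://user@remote-host docker ps
```

Both requests return JSON, which is why tools other than the docker CLI can drive the same daemon.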

Docker Commands

docker run

docker run <imagename> -> To run a container from an image.

docker run -d <imagename> -> To run a container in detached mode.

docker run -e <var_name>=<value> <imagename> -> To set an environment variable.

docker run <imagename>:<tag> -> To run containers with different versions of an image.

NOTE: If we do not specify the tag, "latest" will be the default one.

docker pull

docker pull <imagename> -> To download the image from Docker Hub and store it on our host.

docker ps

docker ps -> Lists the running containers and some basic info about them such as container_id, name of the image, name of the container, current status.

docker ps -a -> Lists all the containers, whether running, stopped, or exited.

docker start

docker start <container_id> -> To start a container.

docker stop

docker stop <container_id> -> To stop a container.

docker inspect

docker inspect <container_id> -> Returns all the details of a container in JSON format.

docker inspect <imagename> -> Returns all the details of an image.

docker images

docker images -> Lists all the available images and their size on the host.

docker rm

docker rm <container_id> -> To remove a stopped or exited container.

docker rmi

docker rmi <imagename> -> To remove an image that we no longer plan to use.

docker exec

docker exec -it <container_id> sh -> To get inside the container.

docker logs

docker logs <container_id> -> To view the logs of a container we run in the background.

docker port

docker run -p <host_port>:<container_port> <imagename> -> To publish a container port on a host port.

docker port <container_id> -> To list the port mappings for a container.

docker stats

docker stats <container_id> -> To view resource usage statistics for one or more containers.

docker top

docker top <container_id> -> To view the processes running inside a container.

docker save

docker save <imagename> > <imagename.tar> -> To save an image to a tar archive.

docker save --output <imagename.tar> <imagename>

docker save -o <imagename.tar> <imagename>

docker save -o <imagename.tar> <imagename>:<tag>

docker load

docker load --input <imagename.tar> -> To load an image from a tar archive.

docker load < <imagename.tar>

NOTE: It restores both images and tags.
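The commands above can be strung together into one container lifecycle. The walkthrough below is a sketch under stated assumptions: the nginx image, the container name demo-web, and host port 8080 are illustrative, and the run helper is a hypothetical wrapper that prints each command and only executes it when the docker CLI is installed.

```shell
#!/bin/sh
# Print each command, then execute it only if the docker CLI is installed.
run() {
  echo "+ $*"
  if command -v docker >/dev/null 2>&1; then
    "$@" || echo "command failed: $*" >&2
  fi
}

NAME="demo-web"   # hypothetical container name

run docker pull nginx:latest                               # download the image
run docker run -d --name "$NAME" -p 8080:80 nginx:latest   # host 8080 -> container 80
run docker ps                                              # confirm it is running
run docker port "$NAME"                                    # show the port mapping
run docker logs "$NAME"                                    # view its output
run docker exec "$NAME" nginx -v                           # run one command inside it
run docker stop "$NAME"                                    # stop the container
run docker rm "$NAME"                                      # remove the stopped container
run docker rmi nginx:latest                                # remove the image
```

The order matters at the end: a container has to be stopped before docker rm removes it, and the image can only be removed once no container still uses it.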

CONCLUSION

In conclusion, Virtual Machines (VMs) and Docker serve distinct but complementary purposes in the realm of virtualization and containerization.

VMs offer full isolation and emulate entire operating systems, making them versatile but resource-intensive. Docker, on the other hand, utilizes lightweight containers that share the host OS kernel, leading to greater efficiency and faster deployment.

Virtual Machines are suitable for scenarios requiring strong isolation and compatibility with diverse operating systems, making them ideal for running multiple applications with different OS requirements on a single host. However, they come with higher resource overhead.

Docker excels in lightweight, portable containerization, streamlining application deployment and scalability. Its efficiency and fast startup times make it well-suited for microservices architectures and cloud-native applications.