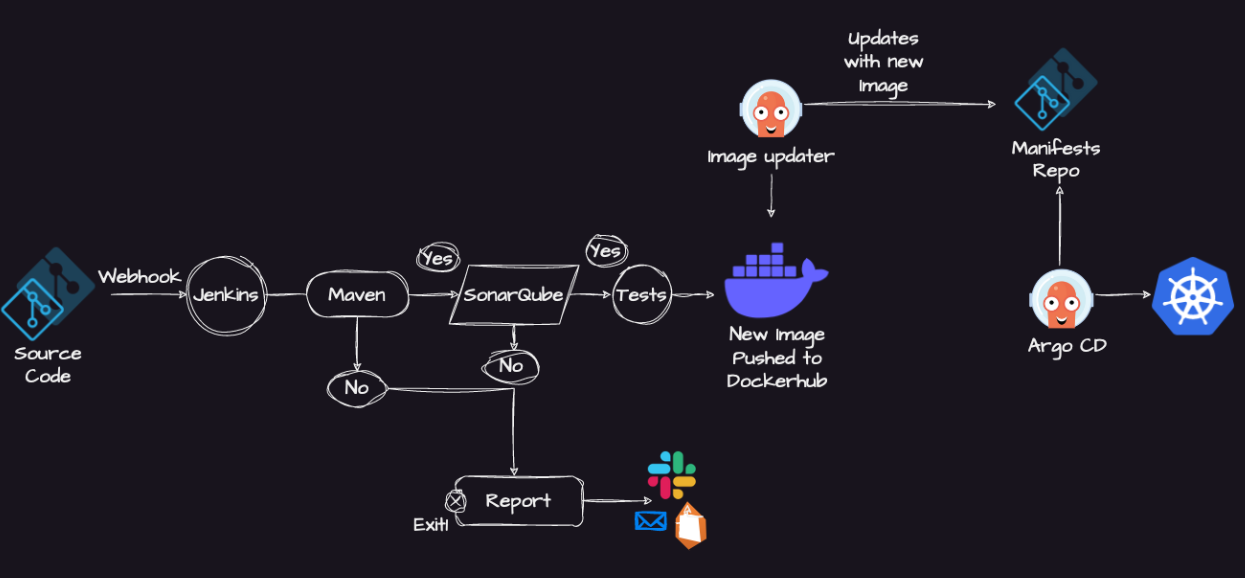

Java Application CI/CD Pipeline with Maven, Jenkins, SonarQube, Docker, Argo CD and Kubernetes

The project involves building and deploying a Java application using a CI/CD pipeline.

Version Control: The code is stored in a version control system such as Git, and hosted on GitHub. The code is organized into branches such as the main or development branch.

Continuous Integration: Jenkins is used as the CI server to build the application. Whenever there is a new code commit, Jenkins automatically pulls the code from GitHub, builds it using Maven, and runs automated tests. If the tests fail, the build is marked as failed and the team is notified.

Code Quality: SonarQube is used to analyze the code and report on code quality issues such as bugs, vulnerabilities, and code smells. The SonarQube analysis is triggered as part of the Jenkins build pipeline.

Containerization: Docker is used to containerize the Java application. The Dockerfile is stored in the Git repository along with the source code. The Dockerfile specifies the environment and dependencies required to run the application.

Container Registry: The Docker image is pushed to DockerHub, a public or private Docker registry. The Docker image can be versioned and tagged for easy identification.

Overall, this project demonstrates how to integrate various tools commonly used in software development to streamline the development process, improve code quality, and automate deployment.

Setup an AWS EC2 Instance

Log in to an AWS account as a user with admin privileges and make sure your region is set to us-east-1 (N. Virginia). Open the EC2 console and click Launch Instance.

For name use Jenkins-ArgoCD-SonarQube-k8s-Server

Select Ubuntu as the AMI and t3.medium as the instance type. Create a new key pair and a new security group that allows SSH, HTTP and HTTPS traffic.

Run Java application on EC2

This step is optional. It lets us see the application we are going to deploy on the Kubernetes cluster.

This is a simple Spring Boot-based Java application that can be built using Maven.

git clone https://github.com/nahidkishore/Jenkins-Zero-To-Hero.git

cd Jenkins-Zero-To-Hero/java-maven-sonar-argocd-helm-k8s/spring-boot-app

sudo apt update

sudo apt install maven -y

mvn clean package

mvn -v

sudo apt update

sudo apt install docker.io -y

sudo usermod -aG docker ubuntu

sudo chmod 666 /var/run/docker.sock

sudo systemctl restart docker

docker build . -t ultimate-cicd-pipeline:v1

docker run -d -p 8010:8080 -t ultimate-cicd-pipeline:v1

Add an inbound rule for port 8010 to the instance's security group.
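The inbound rules added by hand in this walkthrough (8010 for the application, and later 8080 for Jenkins and 9000 for SonarQube) can also be added from the command line. The following is a minimal sketch, assuming the AWS CLI is installed and configured on the machine you run it from. The function name `open_port`, the security group ID and the CIDR range are hypothetical placeholders, not values from this project.

```shell
# Hypothetical helper: allow inbound TCP traffic on one port of a security group.
# Usage: open_port <security-group-id> <port> <source-cidr>
open_port() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port "$2" \
    --cidr "$3"
}

# Example with placeholder values (replace with your own group ID and IP range):
# open_port sg-0123456789abcdef0 8010 203.0.113.10/32
```

Restricting the source CIDR to your own IP address is usually safer than opening the port to 0.0.0.0/0.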

Now we are going to deploy this application on Kubernetes through the CI/CD pipeline.

Continuous Integration

Install and Setup Jenkins

Follow the steps for installing Jenkins on the EC2 instance.

sudo apt update

sudo apt install openjdk-17-jre -y

java -version

curl -fsSL https://pkg.jenkins.io/debian/jenkins.io-2023.key | sudo tee \
  /usr/share/keyrings/jenkins-keyring.asc > /dev/null

echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] \
  https://pkg.jenkins.io/debian binary/ | sudo tee \
  /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update

sudo apt-get install jenkins -y

sudo systemctl start jenkins

sudo systemctl enable jenkins

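Edit the instance's inbound rules to allow custom TCP port 8080, then open Jenkins in your browser at the address below. You can get the ec2-instance-public-ip-address from your AWS EC2 console page.

http://<ec2-instance-public-ip-address>:8080

Jenkins asks for the initial admin password, which you can print with:

sudo cat /var/lib/jenkins/secrets/initialAdminPassword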

After the suggested plugins finish installing, create the first admin user for Jenkins.

Click Save and Continue.

Click Save and Finish.

And now your Jenkins is ready for use

Start Using Jenkins.

Create a new Jenkins pipeline

Github url: https://github.com/nahidkishore/Jenkins-Zero-To-Hero.git

Click on New Item. Select Pipeline and Enter an Item name as Ultimate-demo and click OK.

Select your repository where your Java application code is present.

Set the branch to main, since our repository uses main instead of master, and add the Script Path from the GitHub repo.

Install the Docker Pipeline and SonarQube Plugins

Install the following Plugins in Jenkins

Go to Dashboard → Manage Jenkins → Plugins → Available Plugins and select:

Docker Pipeline Plugin

SonarQube Scanner

Now Click on Install without Restart

Configure a Sonar Server locally

SonarQube is used as part of the build process (Continuous Integration and Continuous Delivery) in all Java services to ensure high-quality code and remove bugs that can be found during static analysis.

Go to your EC2 instance and enter these commands to configure the Sonar Server.

sudo adduser sonarqube

<Enter any password when it prompts you>

sudo apt install unzip

sudo su - sonarqube

When you enter sudo su - sonarqube, you switch to the sonarqube user; the required binaries are then installed as that user.

wget https://binaries.sonarsource.com/Distribution/sonarqube/sonarqube-9.4.0.54424.zip

unzip sonarqube-9.4.0.54424.zip

chmod -R 755 /home/sonarqube/sonarqube-9.4.0.54424

chown -R sonarqube:sonarqube /home/sonarqube/sonarqube-9.4.0.54424

cd sonarqube-9.4.0.54424/bin/linux-x86-64/

./sonar.sh start

By default, the Sonar Server will start on Port 9000. Hence we will need to edit the inbound rule to allow custom TCP Port 9000.
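SonarQube can take a little while to start, so it may help to wait until the server reports that it is up before opening the browser. The sketch below polls the `api/system/status` endpoint with `curl`. The function names `sonar_is_up` and `wait_for_sonar`, the retry count and the five-second delay are assumptions made for illustration.

```shell
# Hypothetical helper: succeed when a SonarQube status document on stdin reports "UP".
sonar_is_up() {
  grep -Eq '"status"[[:space:]]*:[[:space:]]*"UP"'
}

# Hypothetical helper: poll the status endpoint until the server is up.
# Usage: wait_for_sonar <base-url> [max-attempts]
wait_for_sonar() {
  url="$1"
  attempts="${2:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl -fsS "$url/api/system/status" 2>/dev/null | sonar_is_up; then
      echo "SonarQube is up"
      return 0
    fi
    i=$((i + 1))
    sleep 5
  done
  echo "SonarQube did not report UP in time" >&2
  return 1
}

# Example, run on the EC2 instance itself:
# wait_for_sonar http://localhost:9000
```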

Log in with username admin and password admin, then set a new password when prompted.

Create SonarQube Credential in Jenkins

In SonarQube, go to My Account → Security, enter Jenkins as the token name and click Generate. Copy the generated token.

Next, in Jenkins, go to Manage Jenkins → Manage Credentials → System → Global Credentials → Add Credentials and store the token there.

Create DockerHub Credential in Jenkins

Run the below commands as root user to install Docker

sudo su -

sudo apt update

sudo apt install docker.io -y

sudo usermod -aG docker $USER

sudo usermod -aG docker jenkins

sudo systemctl restart docker

Once you have done this, it's a best practice to restart Jenkins.

sudo systemctl restart jenkins

Go to Jenkins → Manage Jenkins → Manage Credentials → Stores scoped to Jenkins → global → Add Credentials

Create GitHub credential in Jenkins

Go to GitHub → Settings → Developer Settings → Personal access tokens → Tokens (classic) → Generate new token

Now, you can see all three credentials have been added

Now click Build Now to run the pipeline.

Look at the deployment.yml file in the repository; you will see that it has been updated recently.

If you go to the SonarQube console, you will see the analysis results for the project.

The deployment.yml file is updated with the latest image.

Check DockerHub to confirm that a new image was created for your Java application.

This completes the Continuous Integration part: Jenkins builds the Java application, SonarQube performs static code analysis, and the latest image is built, pushed to DockerHub and written into the manifest repository.
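To make the stages concrete, here is a rough outline of the same steps as plain shell commands. This is a sketch under assumptions, not the project's actual Jenkinsfile: the function names, the `replaceImageTag` placeholder token and the variables `SONAR_URL`, `SONAR_TOKEN`, `DOCKER_IMAGE` and `BUILD_NUMBER` are all hypothetical.

```shell
# Hypothetical helper: point a manifest at a new image tag by replacing a
# placeholder token. The token name "replaceImageTag" is an assumption.
# Usage: set_image_tag <manifest-file> <tag>
set_image_tag() {
  sed "s/replaceImageTag/$2/g" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Hypothetical outline of the CI stages (defined here, not executed).
# SONAR_URL, SONAR_TOKEN, DOCKER_IMAGE and BUILD_NUMBER are assumed to be set.
ci_build() {
  mvn clean package &&
    mvn sonar:sonar -Dsonar.host.url="$SONAR_URL" -Dsonar.login="$SONAR_TOKEN" &&
    docker build -t "$DOCKER_IMAGE:$BUILD_NUMBER" . &&
    docker push "$DOCKER_IMAGE:$BUILD_NUMBER"
}

# Example: after a successful build, write the build number into the manifest.
# set_image_tag deployment.yml "$BUILD_NUMBER"
```

Committing the changed manifest back to Git is what lets a GitOps tool pick up the new image later.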

Continuous Delivery/Deployment Part(Using GitOps Tool Argo CD)

ArgoCD is utilized in Kubernetes to establish a completely automated continuous delivery pipeline for the configuration of Kubernetes. This tool follows the GitOps approach and operates in a declarative manner to deliver Kubernetes deployments seamlessly.

Launch an AWS EC2 Medium Instance

Go to your AWS Console and log in as a user with admin privileges. Select the Ubuntu image and the t3.medium instance type. Allow HTTP and HTTPS traffic. Use an existing key pair or create a new one. You can name your EC2 instance Deployment-Server. Now, click Launch Instance.

Install AWS CLI and Configure

Login to your console and enter these commands

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt install unzip

unzip awscliv2.zip

sudo ./aws/install

aws --version

You will see that AWS CLI is now installed

Now, you will need to go to the top right corner of your AWS Account and click on Security Credentials. Generate Access key and Secret Access key.

Goto Access Keys → Create Access Keys →Download CSV File. Remember to download the CSV File so that it can be in your downloads section.

Now, go back to the terminal on your EC2 instance and run the command below.

aws configure

Enter the access key ID, secret access key, default region and output format when prompted.
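If you prefer not to answer the prompts interactively, the region and output format can be set with `aws configure set`. The sketch below assumes the AWS CLI installed above; the function name `configure_aws_defaults` and its default values are chosen for illustration.

```shell
# Hypothetical helper: set the default region and output format for the AWS CLI.
# Usage: configure_aws_defaults [region] [output-format]
configure_aws_defaults() {
  aws configure set region "${1:-us-east-1}"
  aws configure set output "${2:-json}"
}

# Credentials can be supplied through environment variables instead of the prompts:
# export AWS_ACCESS_KEY_ID=<your-access-key-id>
# export AWS_SECRET_ACCESS_KEY=<your-secret-access-key>

# To confirm which identity the CLI is using:
# aws sts get-caller-identity
```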

Install and setup Kubectl

Kubectl is a command-line interface (CLI) tool that is used to interact with Kubernetes clusters. It allows users to deploy, inspect, and manage Kubernetes resources such as pods, deployments, services, and more. Kubectl enables users to perform operations such as creating, updating, deleting, and scaling Kubernetes resources.

Run the following steps to install kubectl on EC2 instance.

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin

kubectl version --client

The output would look like this
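Before moving a downloaded binary into /usr/local/bin, you may want to check it against its published checksum. This is a minimal sketch assuming GNU `sha256sum` (available on Ubuntu) and a checksum file that contains only the hex digest. The function name `verify_sha256` is hypothetical.

```shell
# Hypothetical helper: check a file against a digest file that holds only the hex digest.
# Usage: verify_sha256 <file> <digest-file>
verify_sha256() {
  echo "$(cat "$2")  $1" | sha256sum --check --quiet
}

# Example, assuming kubectl was downloaded into the current directory and the
# checksum belongs to the same release as the binary:
# curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"
# verify_sha256 kubectl kubectl.sha256 && echo "checksum OK"
```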

Install and setup eksctl

Download and extract the latest release of eksctl:

curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

Move the extracted binary to /usr/local/bin

sudo mv /tmp/eksctl /usr/local/bin

Test that your installation was successful with the following command

eksctl version

The output would look like this:

Install Helm

The next tool we need is Helm. Helm is a package manager for Kubernetes, an open-source container orchestration platform. Helm helps you manage Kubernetes applications by making it easy to install, update, and delete them.

Use the following script to install Helm:

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

Verify the Helm installation:

helm version

We have now installed the AWS CLI, kubectl, eksctl and Helm.

Creating an Amazon EKS cluster using eksctl

Now in this step, we are going to create Amazon EKS cluster using eksctl

The eksctl command needs the following options:

Name of the cluster: --name eks1

Version of Kubernetes: --version 1.24

Region: --region us-east-1

Nodegroup name/worker nodes: --nodegroup-name worker-nodes

Node type: --node-type t3.medium

Number of nodes: --nodes 2

Minimum number of nodes: --nodes-min 2

Maximum number of nodes: --nodes-max 3

eksctl create cluster --name eks1 --version 1.24 --region us-east-1 --nodegroup-name worker-nodes --node-type t3.medium --nodes 2 --nodes-min 2 --nodes-max 3

Creating the EKS cluster took me about 20 minutes. If you get an error saying the specified availability zone does not have sufficient capacity, run the command again.
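The same cluster settings can also be kept in a file, which is easier to review and reuse than a long command line. The sketch below writes an eksctl ClusterConfig that mirrors the flags used above. The file name `eks1-cluster.yaml` is an assumption, and the cluster itself is not created by this snippet.

```shell
# Write a cluster definition that mirrors the command-line flags used above.
# The file name eks1-cluster.yaml is a hypothetical choice.
cat > eks1-cluster.yaml <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks1
  region: us-east-1
  version: "1.24"

managedNodeGroups:
  - name: worker-nodes
    instanceType: t3.medium
    desiredCapacity: 2
    minSize: 2
    maxSize: 3
EOF

# Create the cluster from the file (not run here):
# eksctl create cluster -f eks1-cluster.yaml
```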

Now, when you go to your AWS Console, you will see the EKS cluster and worker nodes created under Compute.

Set up IAM Role for Service Accounts

The AWS Load Balancer Controller runs on the worker nodes, so it needs access to the AWS ALB/NLB resources via IAM permissions. The IAM permissions can either be set up via IAM roles for service accounts or be attached directly to the worker node IAM roles.

Create IAM OIDC provider

eksctl utils associate-iam-oidc-provider --cluster eks1 --approve

Download IAM policy for the AWS Load Balancer Controller

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

Create IAM Policy

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy-1 \
  --policy-document file://iam_policy.json

Create an IAM role and ServiceAccount for the AWS Load Balancer controller using eksctl tool

eksctl create iamserviceaccount \
  --cluster=eks1 \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --role-name AmazonEKSLoadBalancerControllerRole \
  --attach-policy-arn=arn:aws:iam::<your-aws-account-id>:policy/AWSLoadBalancerControllerIAMPolicy-1 \
  --approve \
  --override-existing-serviceaccounts
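The policy ARN passed to --attach-policy-arn contains an AWS account ID, so it has to match your own account. A small sketch of how the ARN can be assembled is shown below. The function name `policy_arn` is hypothetical, and looking up the account ID requires configured AWS credentials.

```shell
# Hypothetical helper: build the ARN of a customer-managed IAM policy.
# Usage: policy_arn <account-id> <policy-name>
policy_arn() {
  printf 'arn:aws:iam::%s:policy/%s\n' "$1" "$2"
}

# Example (needs configured AWS credentials; not run here):
# ACCOUNT_ID="$(aws sts get-caller-identity --query Account --output text)"
# policy_arn "$ACCOUNT_ID" AWSLoadBalancerControllerIAMPolicy-1
```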

Deploy ALB controller

Install the helm chart by specifying the chart values

helm repo add eks https://aws.github.io/eks-charts

#Update the repo

helm repo update eks

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system \
  --set clusterName=eks1 \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller \
  --set region=us-east-1 \
  --set vpcId=<your-vpc-id>
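The vpcId value is specific to each cluster. One way to look it up is with `aws eks describe-cluster`, as sketched below. The function name `cluster_vpc_id` is hypothetical, and the default region is an assumption taken from the rest of this walkthrough.

```shell
# Hypothetical helper: print the VPC ID that an EKS cluster runs in.
# Usage: cluster_vpc_id <cluster-name> [region]
cluster_vpc_id() {
  aws eks describe-cluster \
    --name "$1" \
    --region "${2:-us-east-1}" \
    --query 'cluster.resourcesVpcConfig.vpcId' \
    --output text
}

# Example (needs configured AWS credentials; not run here):
# VPC_ID="$(cluster_vpc_id eks1)"
# then pass --set vpcId="$VPC_ID" to helm install
```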

Verify that the AWS Load Balancer Controller is installed.

kubectl get deployment -n kube-system aws-load-balancer-controller

Install Argo CD operator

ArgoCD is a widely-used GitOps continuous delivery tool that automates application deployment and management on Kubernetes clusters, leveraging Git repositories as the source of truth. It offers a web-based UI and a CLI for managing deployments, and it integrates with other tools. ArgoCD streamlines the deployment process on Kubernetes clusters and is a popular tool in the Kubernetes ecosystem.

You can refer to this URL https://argo-cd.readthedocs.io/en/stable/

The Argo CD Operator manages the full life cycle of Argo CD and its components.

curl -sL https://github.com/operator-framework/operator-lifecycle-manager/releases/download/v0.24.0/install.sh | bash -s v0.24.0

kubectl create -f https://operatorhub.io/install/argocd-operator.yaml

kubectl get csv -n operators

kubectl get pods -n operators

Goto URL https://argocd-operator.readthedocs.io/en/latest/usage/basics/

The following example shows the most minimal valid manifest to create a new Argo CD cluster with the default configuration.

Create argocd-basic.yml with the following content.

apiVersion: argoproj.io/v1alpha1
kind: ArgoCD
metadata:
  name: example-argocd
  labels:
    example: basic
spec: {}

Use the commands below to apply the manifest and inspect the resulting resources.

kubectl apply -f argocd-basic.yml

kubectl get pods

kubectl get svc

the output would look like this

LoadBalancer services are useful for exposing pods to external traffic where clients have network access to the Kubernetes nodes.

Change the spec.type from ClusterIP to LoadBalancer. Save it.

kubectl edit svc example-argocd-server

output would look like this
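The same change can be made without opening an editor by patching the Service. This is a minimal sketch using `kubectl patch`; the function name `expose_as_loadbalancer` is hypothetical, and the Service name comes from the manifest applied earlier.

```shell
# Hypothetical helper: switch a Service to type LoadBalancer without an editor.
# Usage: expose_as_loadbalancer <service-name>
expose_as_loadbalancer() {
  kubectl patch svc "$1" -p '{"spec":{"type":"LoadBalancer"}}'
}

# Example (needs access to the cluster; not run here):
# expose_as_loadbalancer example-argocd-server
# kubectl get svc example-argocd-server
```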

Password for Argo CD

Next, we need to get the admin password for Argo CD.

kubectl get secret

kubectl edit secret example-argocd-cluster

Copy the admin.password value and decode it from base64 (the value is encoded, not encrypted).

echo <admin.password> | base64 -d
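The copy-and-decode step can be combined into one command by reading the field with a JSONPath expression. The sketch below assumes the secret name example-argocd-cluster shown above; the function names `decode_b64` and `argocd_admin_password` are hypothetical.

```shell
# Hypothetical helper: decode a base64 string passed as the first argument.
decode_b64() {
  printf '%s' "$1" | base64 -d
}

# Hypothetical helper: read and decode the Argo CD admin password in one step
# (needs access to the cluster; defined here, not executed).
argocd_admin_password() {
  kubectl get secret example-argocd-cluster \
    -o jsonpath='{.data.admin\.password}' | base64 -d
}

# Example with a sample value, not a real password:
# decode_b64 cGFzc3dvcmQ=
```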

Deploy a sample application

Copy the LoadBalancer address and open it in your browser. If the browser shows a certificate warning, click Advanced, then click the link at the bottom to proceed.

Username: admin

Password: <the admin password decoded in the previous step>

We will use the Argo CD web interface to run spring-boot-app.

Set up the Github Repository manifest and Kubernetes cluster.

Enter details for your Deployment repository.

Application Name: demo-app

Project Name: default

SYNC POLICY: Automatic

Repository URL: https://github.com/nahidkishore/Jenkins-Zero-To-Hero

Path: java-maven-sonar-argocd-helm-k8s/spring-boot-app-manifests

Cluster URL: https://kubernetes.default.svc

Namespace: kube-system

After you click Create, you can check whether the pods for spring-boot-app are running.

You have now successfully deployed an application using Argo CD.

Argo CD is a Kubernetes controller, responsible for continuously monitoring all running applications and comparing their live state to the desired state specified in the Git repository.
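Because Argo CD works from declared state, the application entered in the web form can also be described as a manifest. The sketch below writes an Application resource with the same values. The file name `demo-app.yaml`, the `targetRevision` value and the `default` metadata namespace (where the example-argocd instance is assumed to run) are assumptions, so adjust them to your setup.

```shell
# Write an Argo CD Application that mirrors the values entered in the web form.
# The file name demo-app.yaml is a hypothetical choice.
cat > demo-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: demo-app
  namespace: default   # assumption: the namespace where example-argocd runs
spec:
  project: default
  source:
    repoURL: https://github.com/nahidkishore/Jenkins-Zero-To-Hero
    path: java-maven-sonar-argocd-helm-k8s/spring-boot-app-manifests
    targetRevision: HEAD   # assumption: track the default branch
  destination:
    server: https://kubernetes.default.svc
    namespace: kube-system
  syncPolicy:
    automated: {}
EOF

# Apply it to the cluster (not run here):
# kubectl apply -f demo-app.yaml
```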

Clean Up

In this stage, you are going to remove all the resources created during the session so that you are not charged for them afterward.

Delete the EKS cluster with the following command.

eksctl delete cluster --name eks1

Thank you for reading this blog. If you found this blog helpful, please like, share, and follow me for more blog posts like this in the future.

— Happy Learning !!!